EXACT AI WHEN YOU NEED IT MOST

PiLogic is a dual-use company revolutionizing aerospace by delivering precise, efficient, and mathematically grounded AI solutions. We provide targeted models with unparalleled accuracy and speed.

We’re building Targeted AI.

Efficient AI. Precise AI.

“We don’t do what LLMs do. You can ask an LLM virtually any question, and it’ll give back an intelligent answer. The tradeoff is that the process is computationally expensive, and the answers to precise questions are not precise enough for key aerospace applications…

…That’s where we’re focusing. One of our models answers just the questions for which it was built, in a particular domain. It’s orders of magnitude less computationally expensive than a large ML model. And it’s accurate and precise.

Our models produce answers that are mathematically grounded in probability theory. Domain experts can inject knowledge to enhance effectiveness, set hard constraints on model behavior, and analyze such behavior. And we do all this in a way that scales.

All this makes our models perfect for critical dual-use aerospace applications, like tracking, diagnosis, and sensor fusion.”

Mark Chavira

Founder & CTO

Former director of AI at Google

PiLogic’s Platform Solutions

-

Diagnostics

Powers high-precision electrical diagnostics by modeling complex systems. The models isolate and remediate faults and predict failures, all in milliseconds.

-

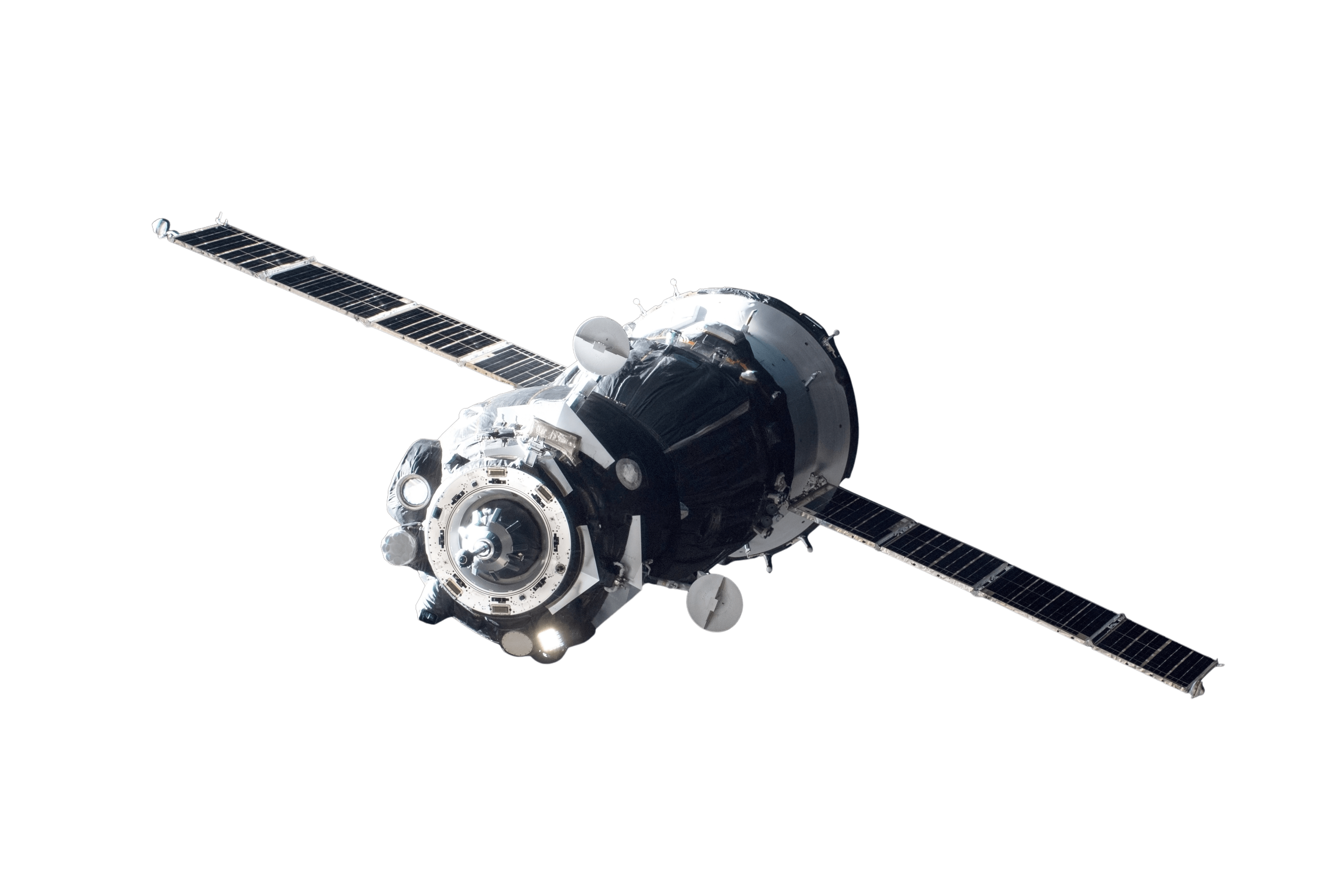

Radar

Rapid analysis of radar data for real-time applications. The system delivers precise object detection, classification, and tracking. Ideal for aerospace, defense, and autonomous systems where fast, accurate decision-making is mission-critical.

-

Sensor Fusion

Fuses diverse sensor readings into coherent, high-confidence insights. The platform resolves uncertainties and discrepancies across multiple sensors, enabling superior diagnostics, tracking, and decision-making in dynamic environments.
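Probabilistic sensor fusion can be illustrated with the textbook case of combining independent Gaussian measurements of a single quantity. This is a minimal sketch, not PiLogic's platform. It assumes scalar readings, known and independent noise variances, and a flat prior. The `Reading` type, the `fuse` function, and the sensor values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """A scalar measurement with an assumed known Gaussian noise variance."""
    value: float
    variance: float


def fuse(readings: list[Reading]) -> Reading:
    """Fuse independent Gaussian readings of the same quantity (flat prior).

    The posterior precision (1/variance) is the sum of the input precisions,
    and the posterior mean is the precision-weighted average of the values.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(r.variance <= 0 for r in readings):
        raise ValueError("variances must be positive")
    precision = sum(1.0 / r.variance for r in readings)
    mean = sum(r.value / r.variance for r in readings) / precision
    return Reading(value=mean, variance=1.0 / precision)


# Hypothetical example: two sensors disagree about a target's range (meters).
radar = Reading(value=100.0, variance=4.0)
lidar = Reading(value=98.0, variance=1.0)
fused = fuse([radar, lidar])
print(fused)  # Reading(value=98.4, variance=0.8)
```

The fused estimate lands closer to the more precise sensor. Its variance (0.8) is lower than either input's, which is the sense in which fusing discrepant readings yields a higher-confidence result.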

PiLogic’s Advantages

-

Accurate

Precise answers with zero hallucinations, powered by Probabilistic Inference.

-

Fast

Up to 10,000x faster than current market-leading models.

-

Efficient

Minimal compute required, allowing operation at the edge on all relevant devices.

Backed by Leading Investors

Meet the Leadership Team

-

Mark Chavira

Founder & CTO

For 30 years, Mark has built real-time systems and AI solutions and managed teams of engineers. He earned a PhD in probabilistic AI, built an inference engine called Ace, and collaborated with NASA to apply AI to diagnosing faults in spacecraft. Mark spent 12 years at Raytheon building real-time avionics operating systems, which emphasized speed, predictability, and reliability.

Mark spent 13 years at Google, where he became an Engineering Director, leading a team of engineers and linguists building probabilistic inference and ML systems that control many aspects of ad targeting and blocking. Mark lives in Los Angeles with his wife and seven children.

-

Johannes Waldstein

Founder & CEO

Johannes has co-founded and led five fast-moving startups over the past 20 years. These companies developed new markets for data science and AI innovations in Europe and North America, and he grew them from initial idea to scalable product-market fit. He founded Fan.AI, an AI-driven adtech company, and led its VC fundraising, raising over $15M from venture investors.

He has a deep interest in automated reasoning and has worked for years with mathematically grounded AI models. He is an endurance athlete, Ironman triathlete, and ultra-distance trail runner. He lives in Los Angeles with his wife and five children.

-

Geoff Bough

Founder & CRO

Geoff has spent over 15 years in business development, sales, and marketing. He cut his teeth in the startup world as the first US hire for FanDuel, overseeing business development and helping the company secure game-changing partnerships that were the building blocks for what is today the largest online gaming company in America.

Additionally, he has held executive roles at Flutter, Caesars, and Triller, where he helped complete a $4B merger. Geoff has also always had an entrepreneurial streak, from investing in early-stage companies to running his own branding and marketing agency, which secured over $500M in strategic partnerships for clients. Geoff lives in the Bay Area with his wife and two children.

FAQs

-

A Large Language Model (LLM) is trained on a massive dataset. LLMs can respond to and generate natural language text, which makes them useful for a wide range of natural language processing tasks such as text summarization, question answering, and more. PiLogic instead uses Probabilistic Inference, which applies probability theory to compute the probabilities of events. Because the answers are mathematically grounded, they are exact and precise. This is ideal when you can’t afford to get things wrong, and aerospace has many use cases that need exact answers fast.
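The following toy fault-diagnosis query is answered exactly, which shows concretely what it means to compute the probabilities of events. This is a minimal sketch under stated assumptions. The two fault causes, the single voltage sensor, and every probability are hypothetical. The method is plain enumeration over all fault states, which grows exponentially with the number of variables, so it is not PiLogic's inference engine. Python's `fractions.Fraction` keeps every intermediate result as an exact rational number, so the posterior carries no rounding error.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy model: two independent fault causes and one voltage sensor.
PRIOR = {"battery_fault": Fraction(1, 100), "wiring_fault": Fraction(1, 50)}

# P(voltage reads low | battery_fault, wiring_fault); illustrative numbers only.
P_LOW = {
    (False, False): Fraction(1, 100),
    (True, False): Fraction(9, 10),
    (False, True): Fraction(4, 5),
    (True, True): Fraction(99, 100),
}


def joint(battery: bool, wiring: bool, low: bool) -> Fraction:
    """Exact joint probability P(battery, wiring, low) from the factorized model."""
    p_b = PRIOR["battery_fault"] if battery else 1 - PRIOR["battery_fault"]
    p_w = PRIOR["wiring_fault"] if wiring else 1 - PRIOR["wiring_fault"]
    p_low = P_LOW[(battery, wiring)]
    return p_b * p_w * (p_low if low else 1 - p_low)


def posterior(fault: str, low: bool = True) -> Fraction:
    """P(fault | sensor reading), computed exactly by enumerating all fault states."""
    if fault not in PRIOR:
        raise KeyError(f"unknown fault: {fault}")
    numerator = Fraction(0)
    evidence = Fraction(0)
    for battery, wiring in product([False, True], repeat=2):
        p = joint(battery, wiring, low)
        evidence += p  # P(reading), summed over every fault state
        state = {"battery_fault": battery, "wiring_fault": wiring}
        if state[fault]:
            numerator += p  # P(fault, reading)
    return numerator / evidence


print(posterior("battery_fault"))  # 167/640
print(posterior("wiring_fault"))   # 297/640
```

Given a low voltage reading, the wiring fault (about 46%) is a more likely explanation than the battery fault (about 26%), mainly because its prior is twice as high.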

-

PiLogic has built an inference engine that uses problem structure in novel ways. This distinctive approach has been independently verified as the fastest technique for performing probabilistic inference. PiLogic’s models are computationally efficient enough to run on standard CPU hardware, and they do not need enormous amounts of training data. The methods pioneered by PiLogic are a real breakthrough in speed, efficiency, and the ability to solve complex problems exactly.

-

PiLogic’s initial focus is on electrical diagnostics, radar, and sensor fusion. The platform and engine can also tackle additional challenges in the aerospace sector. If you have a challenge you are looking to solve, please reach out to us.

-

Yes. We are taking on new clients in aerospace.